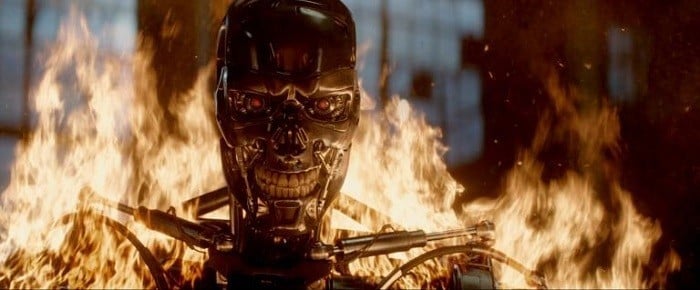

Is technology advancing toward Terminator: Genisys-style artificial intelligence? Image Source: Paramount Pictures

In 2014, the United States was feeling a sense of renewed vigor from the Edward Snowden revelations. It had been a year since the first top-secret NSA documents were published, Snowden had been charged under the Espionage Act, the U.S. government was trying to extradite him from Russia, and Wired had just put him on its cover. But one revelation could have the biggest repercussions of the entire Snowden affair: “MonsterMind,” a Skynet-esque cyber warfare program straight out of Terminator Genisys (just released on Blu-ray and Digital HD).

The government went with a far less subtle name in MonsterMind than Terminator’s nefarious Skynet, but the parallels are there: Just as the fictional Skynet was supposed to be able to detect and respond to threats to the country without any human aid, Snowden claims MonsterMind will have the ability to detect incoming cyber attacks and retaliate without human interference. (It is to be hoped the similarities end there: Movie fans will know that Skynet ended up ushering in a nuclear holocaust, then invented time travel to ensure its survival.)

The plot thickens in Terminator Genisys as time travel is further mastered by AI. Image Source: Paramount Pictures

Whether we like it or not, weapons utilizing artificial intelligence are getting closer to reality every day. The NSA, in fact, currently uses a surveillance program called Skynet (because why not name something after a world-destroying science fiction antagonist?) that capitalizes on lax privacy laws to collect phone metadata and track the location and call activities of whomever it focuses on. And what’s terrifying about new AI systems such as MonsterMind is that the technology has the potential to turn against us.

“These attacks can be spoofed,” Snowden said in the Wired story. “You could have someone sitting in China, for example, making it appear that one of these attacks is originating in Russia. And then we end up shooting back at a Russian hospital. What happens next?”

For the moment, the supercomputers closest to true AI weigh hundreds of tons, take up thousands of square feet and require a nuclear power plant with giant cooling systems to power them, so we’re not likely to see anyone initiate global armageddon from their iPad any time soon. But that hasn’t stopped some of the leading minds in science and technology from advocating against further military AI research. Stephen Hawking, Elon Musk, and Steve Wozniak are just a few of the prominent figures who have signed an open letter calling for a ban on offensive autonomous weapons (or in layman’s terms, killer robots). The potential threat is obvious, but how did we get to a point where technology could choose to threaten us?